You ain’t so good, Google Neural Net

Google recently launched an interactive web game to train its neural network to recognise objects. The game, Quick Draw, asks human users to draw a prompted object within a short period of time, and the machine tries to guess what it is based on what it has learned so far from the inputs of previous players. Quite ingenious to crowdsource the training of a machine learning (ML) program, since many people are always looking for an excuse not to do work.

I wanted to test its learning limits. I more or less had a sense of what previous inputs for the prompts would look like, since humans tend to draw objects similarly when under time pressure. I wondered: if I drew all of the objects from a different perspective, would the program still recognise them, as humans easily can?

The answer is: not really.

I experimented with drawing in a sequence that would not make the object immediately obvious, while keeping the end product clearly recognisable. I also experimented with odd and skewed perspectives. Google Neural Net failed most of the time.

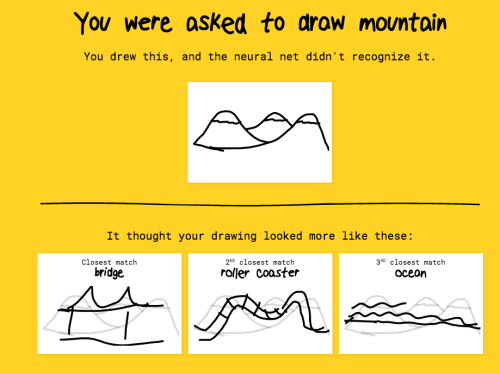

I think my mountains were really good! I started with a skewed line so as not to trigger immediate “mountain” responses from Neural Net, and then quickly added half lines. By the time I was done, any human would have seen that these are really good mountains.

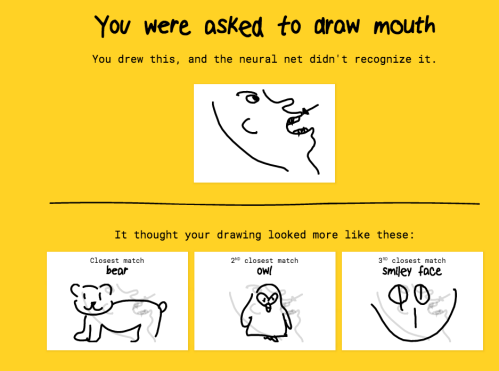

I thought it was cute that my mouth was interpreted as a bear, an owl and a smiley face. What?? I started with the top line forming the nose, lips, mouth, chin and neck, followed by the back of the head. I filled in details and drew an arrow pointing to the mouth. In fairness to Neural Net, its creators probably never intended it to learn the concept of pointing, though I don’t think that task is too difficult given how far we’ve come in ML. It seems Neural Net has really only been learning to identify objects by scanning them as a whole.

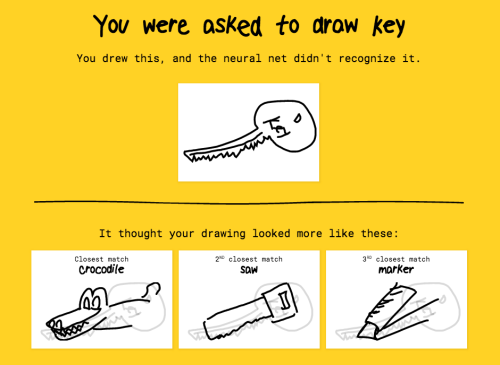

I drew a jagged-tooth key instead of a wedge-end key because I thought the latter would be too obvious. By the time I finished the key, Neural Net still hadn’t recognised it. I had some time left and literally wrote in the word “KEY”, hoping it’d help Neural Net along, but noooope. It thought it was a crocodile. Cute croc though.

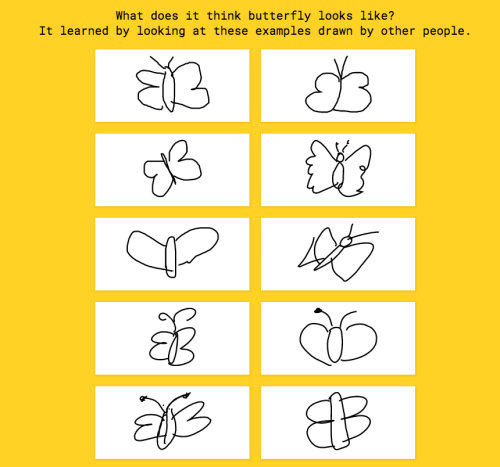

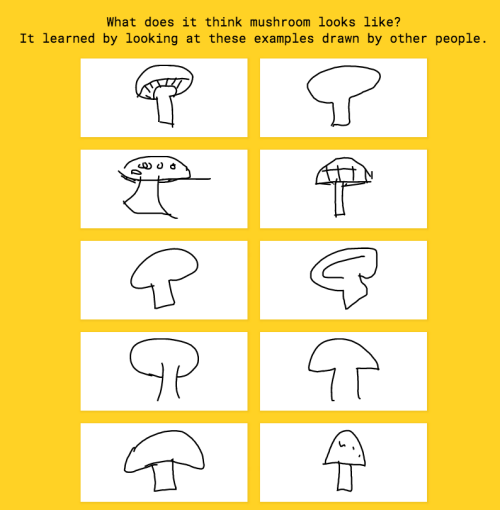

Looking at the examples Neural Net uses as its learned base to pass judgment, one sees that humans tend to draw things either in profile or head-on, and hence that is how Neural Net learns to identify objects.

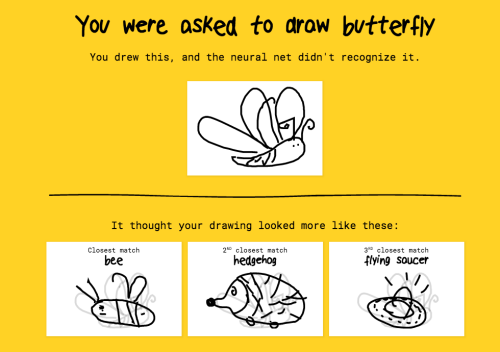

Come on. My butterfly was clearly a better butterfly than all of Neural Net’s learned examples.

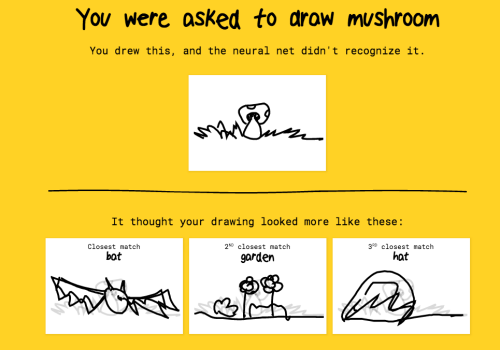

How are some of your examples even mushrooms!? They look more like penises! I declare my mushroom to be mushroomier than your learned examples!

Google Neural Net, it seems you have a long way to go.

Bonus pic from a friend:

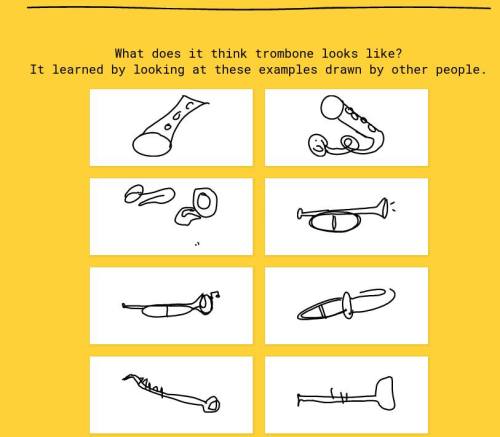

COME ON. In what universe are these trombones?? I’m starting to think people have never seen what a trombone looks like.